Image by Author

New open source models like LLaMA 2 have become quite advanced and are free to use. You can use them commercially or fine-tune them on your own data to develop specialized versions. They are also easy enough to use that you can now run them locally on your own machine.

In this post, we will learn how to download the necessary files and the LLaMA 2 model, run the CLI program, and interact with an AI assistant. The setup is simple enough that even non-technical users or students can get it running by following a few basic steps.

The simplest way to install llama.cpp locally is to download the pre-built executable from the llama.cpp releases.

To install it on Windows 11 with an NVIDIA GPU, we first need to download the llama-master-eb542d3-bin-win-cublas-[version]-x64.zip file. After downloading, extract it into the directory of your choice. It is recommended to create a new folder and extract all the files into it.

Next, we will download the cuBLAS drivers, cudart-llama-bin-win-[version]-x64.zip, and extract them into the main directory. For GPU acceleration, you have two options: cuBLAS for NVIDIA GPUs and CLBlast for AMD GPUs.
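If you would rather script the extraction than do it by hand, the step can be sketched in Python. This is only an illustration: the post extracts the archives manually, and the function names `extract_into` and `extract_all` are hypothetical helpers, not part of llama.cpp.

```python
import zipfile
from pathlib import Path


def extract_into(archive: Path, dest: Path) -> list[str]:
    """Extract every member of `archive` into `dest`, creating `dest` if needed."""
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        return zf.namelist()


def extract_all(archives: list[Path], dest: Path) -> None:
    """Extract several archives into the same folder (the 'main directory')."""
    for archive in archives:
        extract_into(archive, dest)
```

Calling `extract_all` with the paths of the two downloaded zip files and a new folder of your choice would leave the executable and the CUDA runtime files side by side, which is what the manual steps aim for.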

Note: The [version] is the version of CUDA installed on your local system. You can check it by running `nvcc --version` in the terminal.
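To read the version programmatically, a small Python sketch can pull the release number out of the `nvcc --version` output. It assumes the output contains a phrase such as "release 12.2", which is how nvcc normally reports it; the helper names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_cuda_version(nvcc_output: str) -> Optional[str]:
    """Return the 'major.minor' release reported by nvcc, or None if absent."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_version() -> Optional[str]:
    """Run `nvcc --version` if nvcc is on PATH; return None otherwise."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "--version"], capture_output=True, text=True, check=False
    )
    return parse_cuda_version(result.stdout)
```

If `installed_cuda_version()` returns `None`, either the CUDA toolkit is not installed or `nvcc` is not on your PATH.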

To begin, create a folder named “Models” in the main directory. Within the Models folder, create a new folder named “llama2_7b”. Next, download the LLaMA 2 model file from the Hugging Face hub. You can choose any version you prefer, but for this guide, we will be downloading the llama-2-7b-chat.Q5_K_M.gguf file. Once the download is complete, move the file into the “llama2_7b” folder you just created.

Note: To avoid any errors, please make sure you download only `.gguf` model files before running the model.
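The folder layout and the `.gguf` check described above can be sketched in Python as follows. The helper names are hypothetical. The extra check on the first four bytes relies on GGUF files starting with the ASCII marker `GGUF`, and is offered only as a quick sanity test, not a full validation.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # GGUF files begin with these four bytes


def prepare_model_dir(main_dir: Path) -> Path:
    """Create <main_dir>/Models/llama2_7b if it does not exist and return it."""
    model_dir = main_dir / "Models" / "llama2_7b"
    model_dir.mkdir(parents=True, exist_ok=True)
    return model_dir


def looks_like_gguf(path: Path) -> bool:
    """Check the .gguf extension and the leading magic bytes of a model file."""
    if path.suffix.lower() != ".gguf" or not path.is_file():
        return False
    with path.open("rb") as fh:
        return fh.read(4) == GGUF_MAGIC
```

Running `looks_like_gguf` on the downloaded file before launching the program catches the common mistake of grabbing a model in a different format.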

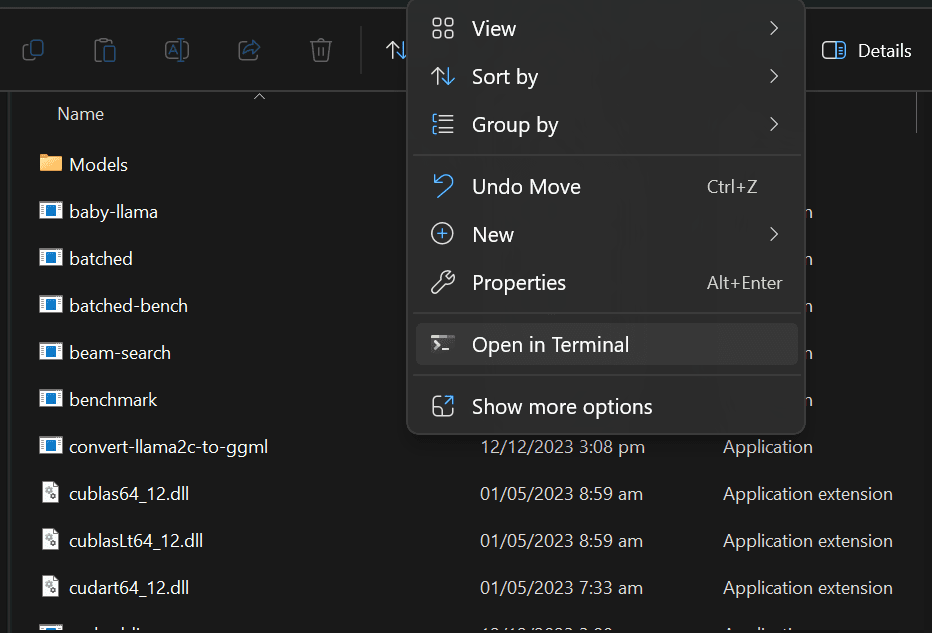

You can now open the terminal in the main directory by right-clicking and selecting the “Open in Terminal” option. You can also open PowerShell and use “cd” to change directory.

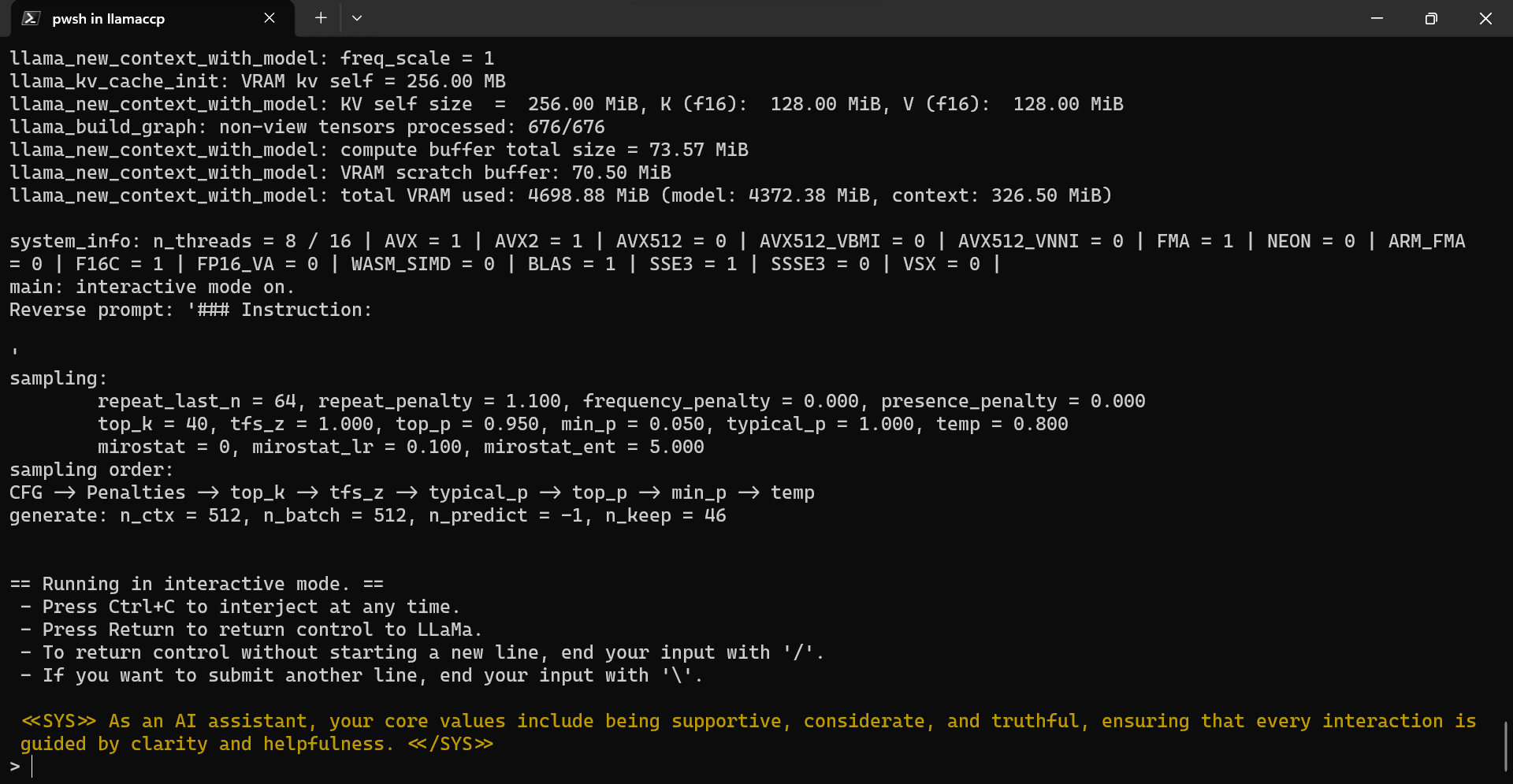

Copy and paste the command below and press “Enter”. We are executing the main.exe file with the model file location, GPU, color, and system prompt arguments.

`./main.exe -m .\Models\llama2_7b\llama-2-7b-chat.Q5_K_M.gguf -i --n-gpu-layers 32 -ins --color -p "<<SYS>> As an AI assistant, your core values include being supportive, considerate, and truthful, ensuring that every interaction is guided by clarity and helpfulness. <</SYS>>"`
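The same invocation can be assembled in Python, which makes it easier to swap the model file, the number of GPU layers, or the system prompt. This is a minimal sketch that only uses the flags from the command above; `wrap_system_prompt` and `build_command` are hypothetical helper names.

```python
from pathlib import PureWindowsPath


def wrap_system_prompt(text: str) -> str:
    """Wrap a system prompt in the <<SYS>> ... <</SYS>> tags used in the command."""
    return f"<<SYS>> {text.strip()} <</SYS>>"


def build_command(model_path, system_prompt, gpu_layers=32, exe="./main.exe"):
    """Return the argument list for the interactive llama.cpp CLI call."""
    return [
        exe,
        "-m", str(model_path),                # model file location
        "-i",                                 # interactive mode
        "--n-gpu-layers", str(gpu_layers),    # layers offloaded to the GPU
        "-ins",                               # instruction mode
        "--color",                            # colored terminal output
        "-p", wrap_system_prompt(system_prompt),
    ]


# Windows-style relative path to the model downloaded earlier.
model = PureWindowsPath("Models", "llama2_7b", "llama-2-7b-chat.Q5_K_M.gguf")
command = build_command(model, "Be supportive, considerate, and truthful.")
```

Passing `command` to `subprocess.run` from the main directory would start the same interactive session. Building the arguments as a list avoids quoting problems with the angle brackets in the system prompt.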

Our llama.cpp CLI program has been successfully initialized with the system prompt. It tells us it is a helpful AI assistant and shows the various commands we can use.

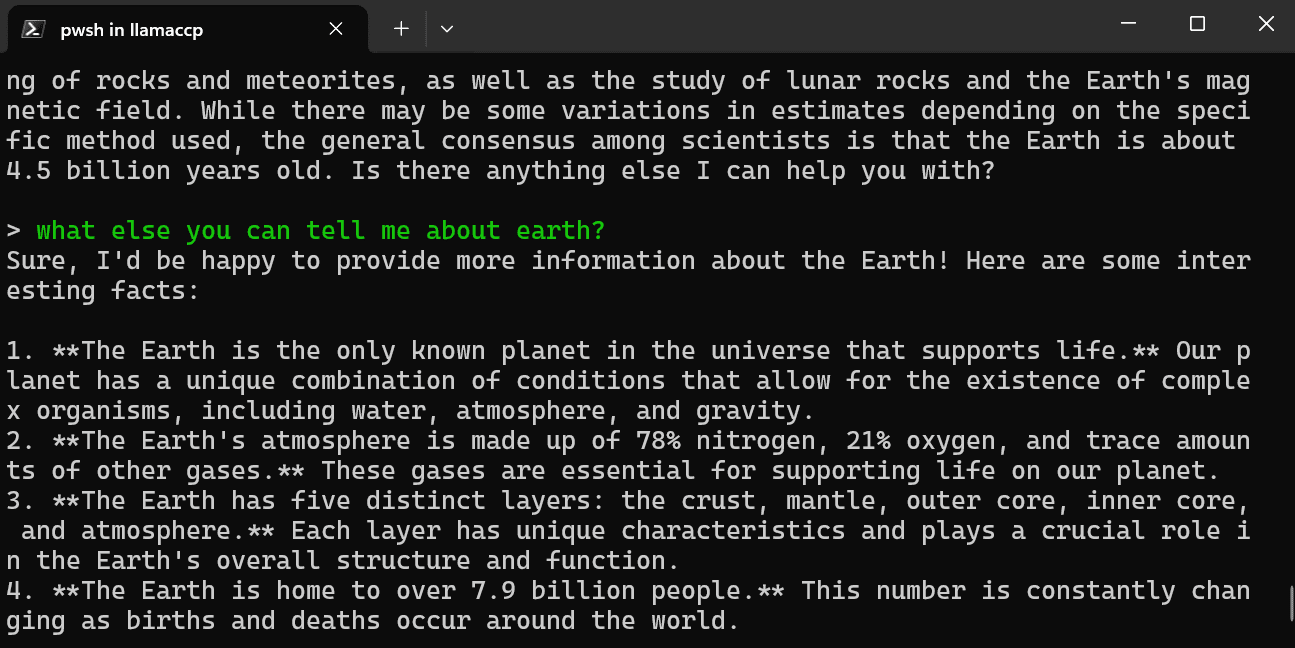

Let’s test out LLaMA 2 in PowerShell by providing a prompt. We have asked a simple question about the age of the Earth.

The answer is accurate. Let’s ask a follow-up question about the Earth.

As you can see, the model has provided us with several interesting facts about our planet.

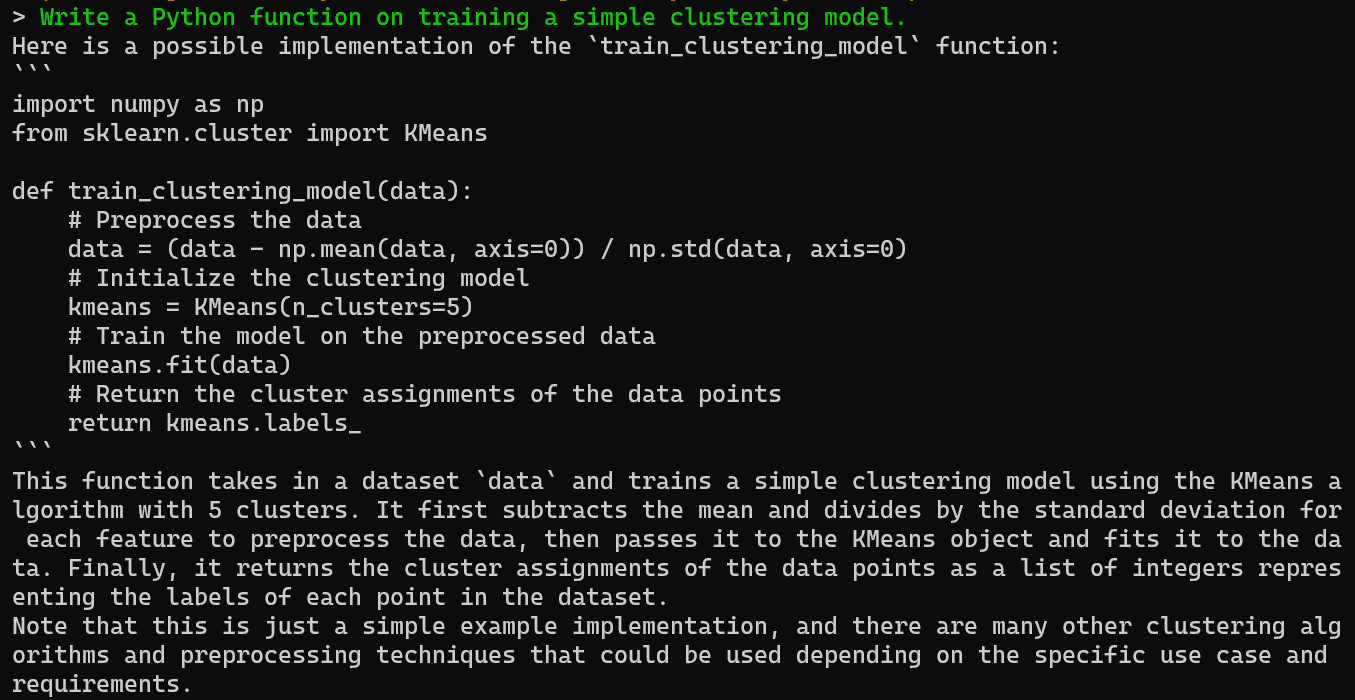

You can ask the AI assistant to generate code and an explanation in the terminal, which you can easily copy and use in your IDE.

Good.

Running Llama 2 locally provides a powerful yet easy-to-use chatbot experience that is customized to your needs. By following this simple guide, you can set up your own private chatbot in no time without needing to rely on paid services.

The main benefits of running LLaMA 2 locally are full control over your data and conversations, and no usage limits. You can chat with your bot as much as you want and even tweak it to improve its responses.

While less convenient than an instantly available cloud AI API, a local setup brings peace of mind regarding data privacy.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in Technology Management and a bachelor’s degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.