Image by Author

Gemini is a new model developed by Google, and with it Bard is becoming usable again. With Gemini, it is now possible to get remarkably accurate answers to queries that combine images, audio, and text.

In this tutorial, we will learn about the Gemini API and how to set it up on your machine. We will also explore various Python API functions, including text generation and image understanding.

Gemini is a new AI model developed through a collaboration between teams across Google, including Google Research and Google DeepMind. It was built specifically to be multimodal, meaning it can understand and work with different types of data such as text, code, audio, images, and video.

Gemini is the most advanced and largest AI model Google has developed to date. It is designed to be flexible enough to run efficiently on a wide range of systems, from data centers to mobile devices. This means it has the potential to change how businesses and developers build and scale AI applications.

There are three versions of the Gemini model, each designed for a different use case:

- Gemini Ultra: The largest and most capable model, built for highly complex tasks.

- Gemini Pro: A balanced model that offers good performance and scalability.

- Gemini Nano: The most efficient model, intended for mobile devices.

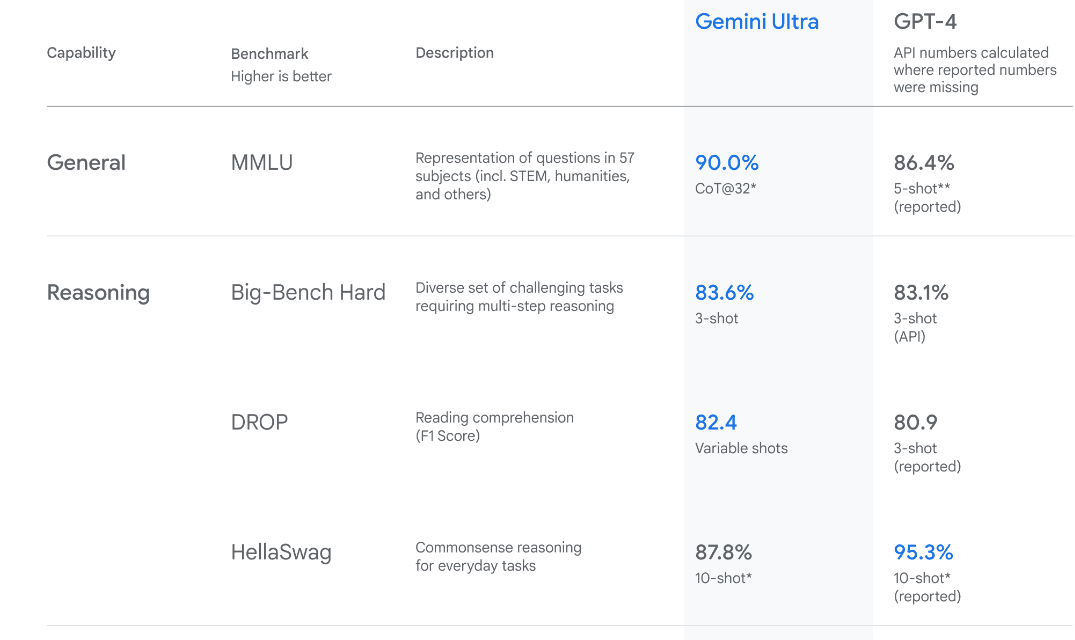

Image from Introducing Gemini

Gemini Ultra delivers state-of-the-art performance, exceeding GPT-4 on several benchmarks. It is the first model to outperform human experts on the Massive Multitask Language Understanding (MMLU) benchmark, which tests world knowledge and problem solving across 57 diverse subjects. This showcases its advanced understanding and problem-solving capabilities.

To use the API, we first need an API key, which you can get here: https://ai.google.dev/tutorials/setup


After that, click the "Get an API key" button and then click "Create API key in new project".


Copy the API key and set it as an environment variable. We are using Deepnote, where it is easy to store the key under the name "GEMINI_API_KEY": go to the Integrations panel, scroll down, and select Environment variables.


Next, we will install the Python API using pip:

```bash
pip install -q -U google-generativeai
```

After that, we will pass the API key to Google's GenAI library to configure the client.

```python
import os

import google.generativeai as genai

# Read the key stored in the GEMINI_API_KEY environment variable
gemini_api_key = os.environ["GEMINI_API_KEY"]

genai.configure(api_key=gemini_api_key)
```
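`os.environ["GEMINI_API_KEY"]` raises a bare `KeyError` when the variable was never set, which can be confusing in a fresh notebook. Below is a minimal sketch of a friendlier check; the helper name `get_api_key` is hypothetical and not part of the `google-generativeai` library.

```python
import os


def get_api_key(var_name: str = "GEMINI_API_KEY") -> str:
    """Return the API key stored in `var_name`, or fail with a clear message.

    `get_api_key` is a hypothetical helper, not part of google-generativeai.
    """
    key = os.environ.get(var_name, "").strip()  # treat unset and blank alike
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Create a key at "
            "https://ai.google.dev/tutorials/setup and store it as an "
            "environment variable."
        )
    return key


# Usage with the library (requires a real key):
# genai.configure(api_key=get_api_key())
```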

Once the API key is configured, generating content with the Gemini Pro model is simple: pass a prompt to the `generate_content` function and display the output as Markdown.

```python
from IPython.display import Markdown

model = genai.GenerativeModel('gemini-pro')
```

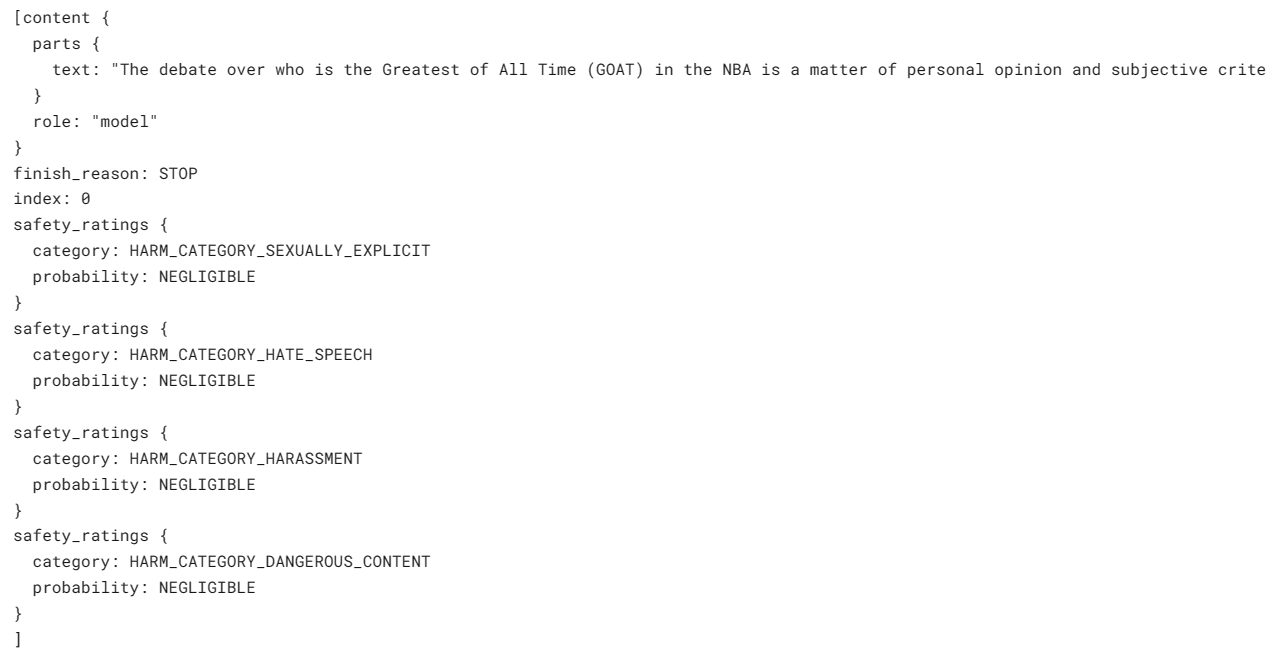

```python
response = model.generate_content("Who is the GOAT in the NBA?")

Markdown(response.text)
```

This is amazing, but I don't agree with the list. Still, I understand that it comes down to personal preference.


Gemini can generate multiple responses, called candidates, for a single prompt, and you can pick the most suitable one. In our case, we got only one response.
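To look at every candidate instead of only `response.text`, you can iterate over `response.candidates`. The sketch below assumes each candidate exposes `.content.parts` and each part a `.text` attribute; it uses `SimpleNamespace` stand-ins so it runs without an API key, and the helper name `candidate_texts` is hypothetical.

```python
from types import SimpleNamespace
from typing import Any


def candidate_texts(response: Any) -> list[str]:
    """Return the full text of each candidate in a generate_content response.

    Assumes the shape response.candidates[i].content.parts[j].text
    (text-only output); `candidate_texts` is a hypothetical helper.
    """
    texts = []
    for candidate in response.candidates:
        # One candidate's answer may be split across several parts; join them
        texts.append("".join(part.text for part in candidate.content.parts))
    return texts


# Offline stand-in for a real response with a single candidate
fake_response = SimpleNamespace(candidates=[
    SimpleNamespace(content=SimpleNamespace(parts=[
        SimpleNamespace(text="first part, "),
        SimpleNamespace(text="second part"),
    ]))
])
print(candidate_texts(fake_response))
```

With a real response you would call `candidate_texts(response)` and choose among the returned strings.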

Let's ask it to write a simple game in Python.

```python
response = model.generate_content("Build a simple game in Python")

Markdown(response.text)
```

The result is simple and to the point. Most LLMs start explaining Python code instead of writing it.


You can customize the response using the `generation_config` argument. Here we limit the candidate count to 1, add the stop sequence "space", and set the maximum number of output tokens and the temperature.

```python
response = model.generate_content(
    'Write a short story about aliens.',
    generation_config=genai.types.GenerationConfig(
        candidate_count=1,         # return a single response
        stop_sequences=['space'],  # stop generating when "space" would appear
        max_output_tokens=200,     # cap the response length
        temperature=0.7)           # moderate randomness
)

Markdown(response.text)
```

As you can see, the response stopped right before the word "space". Amazing.
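The effect of a stop sequence on the visible output can be illustrated with plain string handling. This is a sketch of the observable behavior only (the text is cut before the earliest stop sequence, which is not included), not how the API stops generation internally; `apply_stop_sequences` is a hypothetical name.

```python
def apply_stop_sequences(text: str, stop_sequences: list[str]) -> str:
    """Cut `text` just before the earliest occurrence of any stop sequence.

    Illustrative only: mimics the visible effect of `stop_sequences`
    on finished text, not the API's internal stopping logic.
    """
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop sequence would match at index 0
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


story = "The aliens landed at dawn. They had crossed deep space to reach us."
print(repr(apply_stop_sequences(story, ["space"])))
```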


You can also pass the `stream` argument to stream the response. It works much like streaming in the Anthropic and OpenAI APIs, and in my testing it felt faster.

```python
model = genai.GenerativeModel('gemini-pro')

response = model.generate_content("Write a Julia function for cleaning the data.", stream=True)

# Print each chunk as soon as it arrives
for chunk in response:
    print(chunk.text)
```


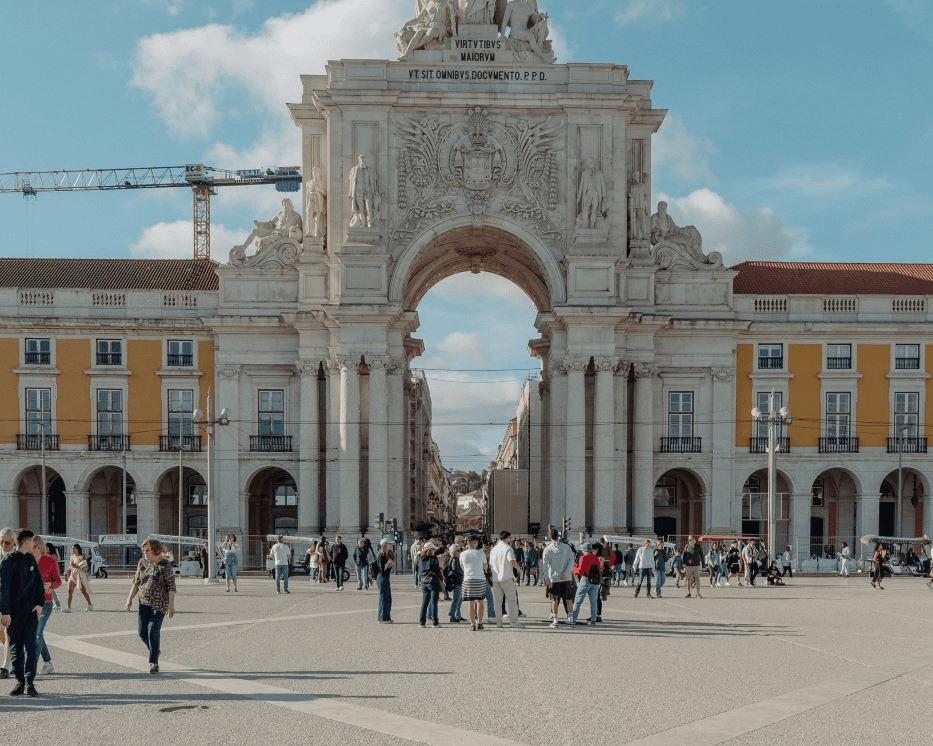
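If you also need the complete text after streaming (for example, to save it), you can handle each chunk as it arrives and join the pieces. A minimal sketch, assuming each streamed chunk has a `.text` attribute as in the loop above; `collect_stream` is a hypothetical helper, and the `SimpleNamespace` chunks stand in for a real stream.

```python
from types import SimpleNamespace
from typing import Any, Callable, Iterable


def collect_stream(chunks: Iterable[Any],
                   on_chunk: Callable[[str], None] = print) -> str:
    """Handle each streamed chunk as it arrives and return the joined text.

    Assumes each chunk has a `.text` attribute, like the objects yielded
    when iterating over generate_content(..., stream=True).
    """
    pieces = []
    for chunk in chunks:
        on_chunk(chunk.text)        # e.g. print or update a UI immediately
        pieces.append(chunk.text)
    return "".join(pieces)


# Offline stand-in for a streamed response
fake_stream = [SimpleNamespace(text="function clean_data"),
               SimpleNamespace(text="(df)\n"),
               SimpleNamespace(text="end\n")]
full_text = collect_stream(fake_stream, on_chunk=lambda text: print(text, end=""))
```

With the real API this would look like `full_text = collect_stream(model.generate_content(prompt, stream=True))`.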

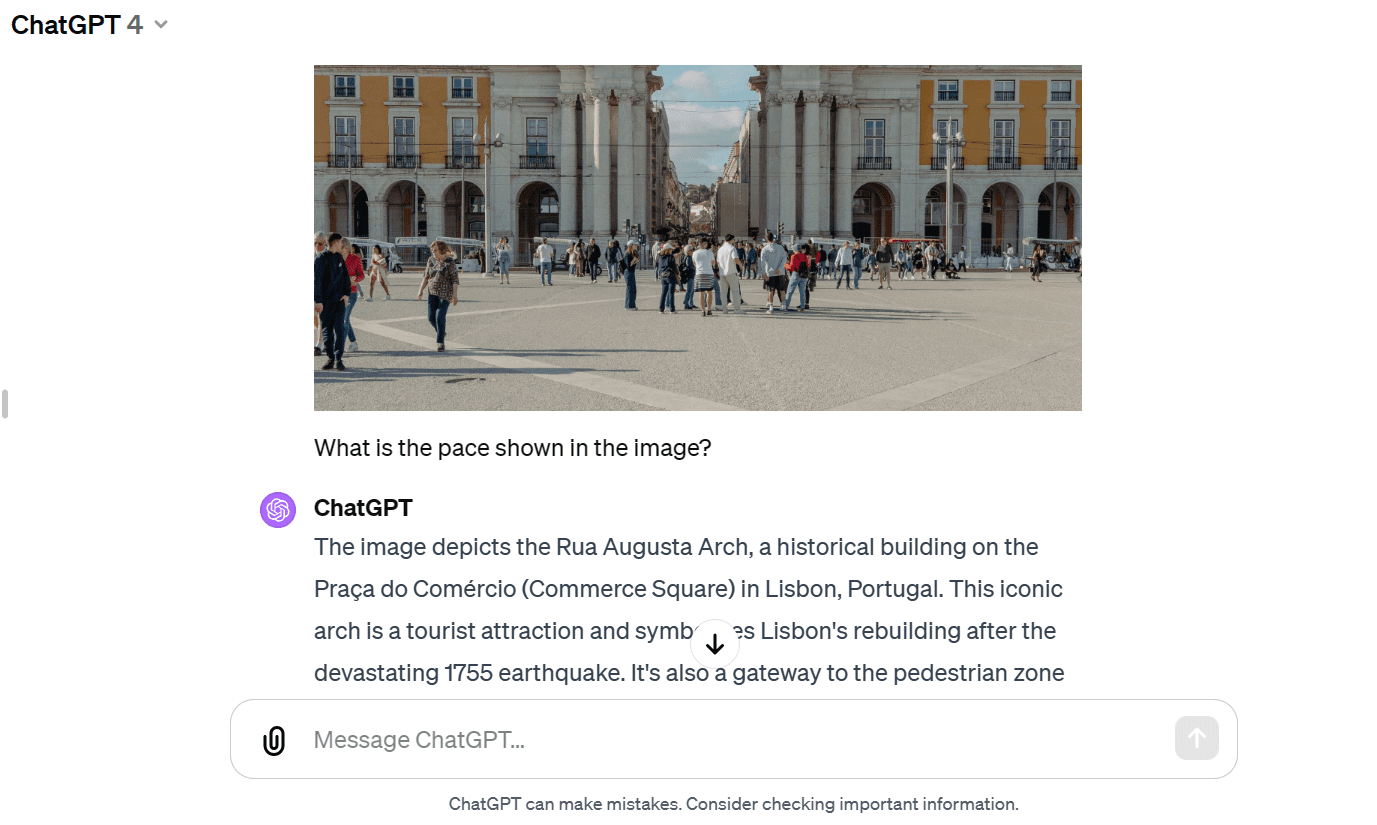

In this section, we will load a photo by Masood Aslami and use it to test the multimodal capabilities of Gemini Pro Vision.

Load the image with `PIL` and display it.

```python
import PIL.Image

# Open the local photo and show it inline in the notebook
img = PIL.Image.open('images/photo-1.jpg')

img
```

We have a high-quality photo of the Rua Augusta Arch.

Let's load the Gemini Pro Vision model and provide it with the image.

```python
model = genai.GenerativeModel('gemini-pro-vision')

response = model.generate_content(img)

Markdown(response.text)
```

The model accurately identified the landmark and provided additional information about its history and architecture.


Let's give the same image to GPT-4 and ask it about the image. Both models provided very similar answers, but I like the GPT-4 response more.

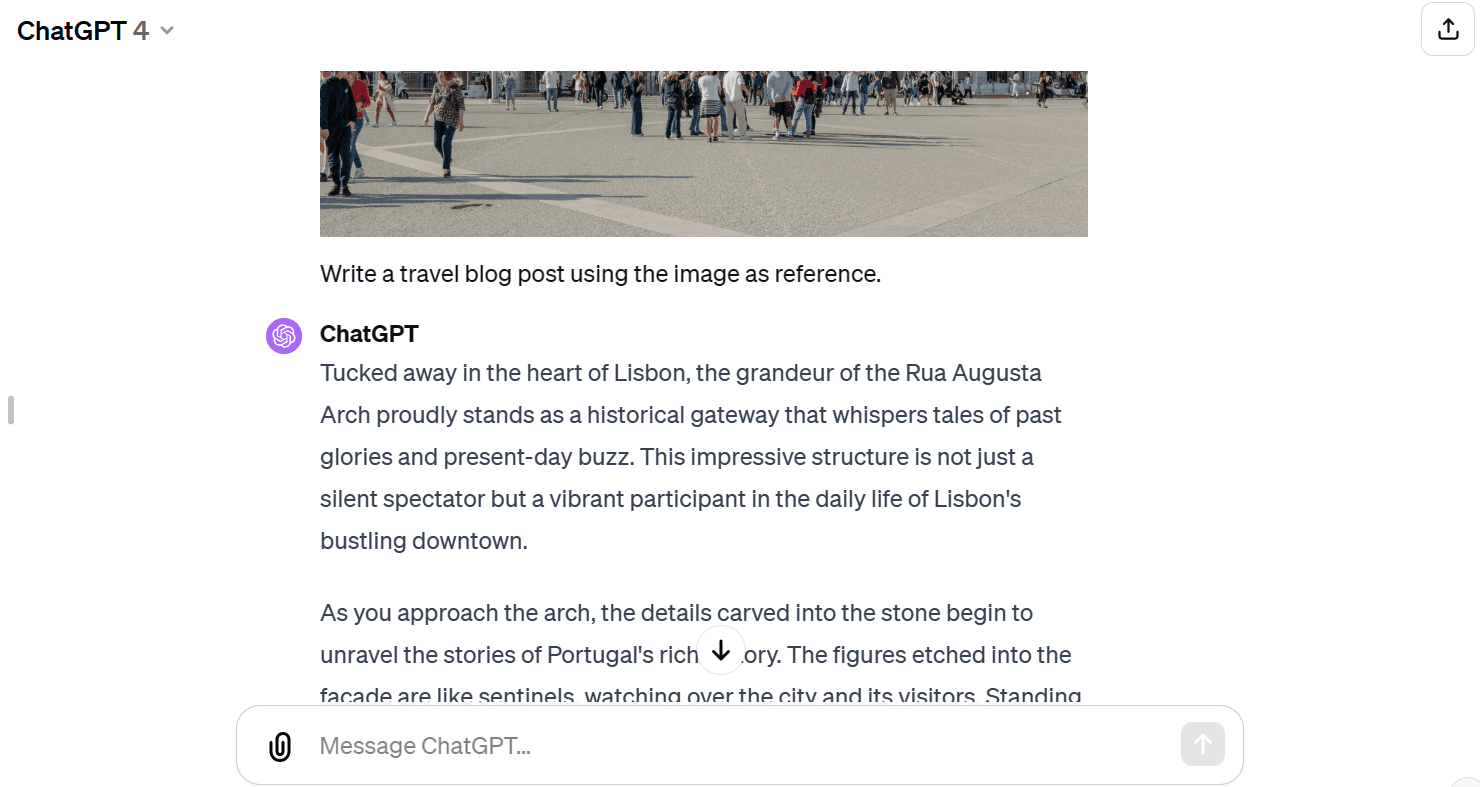

We will now provide both text and the image to the API, asking the vision model to write a travel blog post using the image as a reference.

```python
# A prompt can be a list that mixes text and images
response = model.generate_content(["Write a travel blog post using the image as reference.", img])

Markdown(response.text)
```

It gave me a short blog post. I was expecting a longer format.


Compared to GPT-4, the Gemini Pro Vision model struggled to generate a long-form blog post.
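This tutorial uses `gemini-pro` for text-only prompts and `gemini-pro-vision` for prompts that include an image. If your code builds prompts dynamically, a small helper can pick the model name. This is a sketch under one stated assumption: any prompt part that is not a `str` (such as a PIL image) is treated as an image. `pick_model_name` is a hypothetical name.

```python
from typing import Any, Sequence

TEXT_MODEL = "gemini-pro"            # text-only prompts, as used above
VISION_MODEL = "gemini-pro-vision"   # prompts that include images


def pick_model_name(contents: Sequence[Any]) -> str:
    """Choose a model name based on the prompt parts.

    Assumption: any part that is not a `str` (e.g. a PIL image) is an image.
    """
    if any(not isinstance(part, str) for part in contents):
        return VISION_MODEL
    return TEXT_MODEL


# Usage with the library (requires an API key):
# parts = ["Write a travel blog post using the image as reference.", img]
# model = genai.GenerativeModel(pick_model_name(parts))
```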

We can set up the model for a back-and-forth chat session. This way, the model remembers the context and responds based on the previous conversation.

In our case, we started a chat session and asked the model to help me get started with the game Dota 2.

```python
model = genai.GenerativeModel('gemini-pro')

# Start with an empty history; the chat object records every turn
chat = model.start_chat(history=[])

chat.send_message("Can you please guide me on how to start playing Dota 2?")

chat.history
```

As you can see, the `chat` object stores the history of both the user's and the model's messages.
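Because `start_chat` takes a `history` argument, you could also seed a session with earlier turns instead of starting from `[]`. The sketch below assumes the history is a list of `{"role": ..., "parts": [...]}` records with roles `"user"` and `"model"`, mirroring what `chat.history` shows; `build_history` is a hypothetical helper and the model reply is made up for illustration.

```python
def build_history(turns: list[tuple[str, str]]) -> list[dict]:
    """Convert (user_message, model_reply) pairs into role/parts records.

    Assumes the {"role": ..., "parts": [...]} shape with roles "user"
    and "model"; `build_history` is a hypothetical helper.
    """
    history = []
    for user_text, model_text in turns:
        history.append({"role": "user", "parts": [user_text]})
        history.append({"role": "model", "parts": [model_text]})
    return history


seed = build_history([
    ("Can you please guide me on how to start playing Dota 2?",
     "Start with the tutorial and a few bot matches."),  # hypothetical reply
])

# With the library (requires an API key):
# chat = model.start_chat(history=seed)
```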


We can also display the messages in Markdown style.

```python
from IPython.display import Markdown, display

# Show each message as "**role**: text"
for message in chat.history:
    display(Markdown(f'**{message.role}**: {message.parts[0].text}'))
```
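The loop above shows only `parts[0]` of each message. If a message ever contains several parts, a variant that joins all of them into one Markdown string could look like the sketch below. It assumes each message has `.role` and `.parts`, and that text parts expose `.text`; `history_to_markdown` is a hypothetical helper, and the `SimpleNamespace` objects stand in for `chat.history`.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def history_to_markdown(history: Iterable[Any]) -> str:
    """Render chat history as one Markdown string, joining every text part.

    Assumes each message has `.role` and `.parts`, with text parts exposing
    `.text`; parts without `.text` are treated as empty.
    """
    lines = []
    for message in history:
        text = "".join(getattr(part, "text", "") for part in message.parts)
        lines.append(f"**{message.role}**: {text}")
    return "\n\n".join(lines)  # blank line keeps messages as separate paragraphs


# Offline stand-in for chat.history
fake_history = [
    SimpleNamespace(role="user", parts=[SimpleNamespace(text="Hi")]),
    SimpleNamespace(role="model", parts=[SimpleNamespace(text="Hello, "),
                                         SimpleNamespace(text="how can I help?")]),
]
print(history_to_markdown(fake_history))

# In a notebook: display(Markdown(history_to_markdown(chat.history)))
```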


Let's ask a follow-up question.

```python
chat.send_message("Which Dota 2 heroes should I start with?")

for message in chat.history:
    display(Markdown(f'**{message.role}**: {message.parts[0].text}'))
```

We can scroll down to see the full session with the model.


Embedding models are becoming increasingly popular for context-aware applications. The Gemini embedding-001 model represents words, sentences, or entire documents as dense vectors that encode semantic meaning. This makes it easy to measure how similar two pieces of text are by comparing their embedding vectors.
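The text does not say which comparison to use; cosine similarity is a common choice for embeddings, so here is a minimal sketch of it in plain Python. It would work on any two lists returned in `output['embedding']`, provided they have the same length.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))  # same direction
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # orthogonal
```

Values close to 1 indicate semantically similar texts; values near 0 indicate unrelated ones.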

We can pass the content to `embed_content` to convert the text into embeddings. It's that simple.

```python
output = genai.embed_content(
    model="models/embedding-001",
    content="Can you please guide me on how to start playing Dota 2?",
    task_type="retrieval_document",
    title="Embedding of Dota 2 question")

# Show the first 10 dimensions of the embedding vector
print(output['embedding'][0:10])
```

[0.060604308, -0.023885584, -0.007826327, -0.070592545, 0.021225851, 0.043229062, 0.06876691, 0.049298503, 0.039964676, 0.08291664]

We can convert multiple chunks of text into embeddings by passing a list of strings to the `content` argument.

```python
output = genai.embed_content(
    model="models/embedding-001",
    content=[
        "Can you please guide me on how to start playing Dota 2?",
        "Which Dota 2 heroes should I start with?",
    ],
    task_type="retrieval_document",
    title="Embedding of Dota 2 question")

# One embedding per input string; print the first 10 values of each
for emb in output['embedding']:
    print(emb[:10])
```

[0.060604308, -0.023885584, -0.007826327, -0.070592545, 0.021225851, 0.043229062, 0.06876691, 0.049298503, 0.039964676, 0.08291664]

[0.04775657, -0.044990525, -0.014886052, -0.08473655, 0.04060122, 0.035374347, 0.031866882, 0.071754575, 0.042207796, 0.04577447]
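With a batch of document embeddings, a simple semantic search ranks documents by similarity to a query embedding. A minimal sketch, assuming the query is embedded separately (for example with `task_type="retrieval_query"`) and cosine similarity is the metric; `rank_by_similarity` is a hypothetical helper and the 2-D vectors are toy data.

```python
import math
from typing import Sequence


def rank_by_similarity(query: Sequence[float],
                       documents: Sequence[Sequence[float]],
                       top_k: int = 3) -> list[tuple[int, float]]:
    """Return (document_index, cosine_score) pairs, best match first."""
    def cosine(a: Sequence[float], b: Sequence[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.sqrt(sum(x * x for x in a)) *
                      math.sqrt(sum(y * y for y in b)))

    scores = [(i, cosine(query, doc)) for i, doc in enumerate(documents)]
    scores.sort(key=lambda pair: pair[1], reverse=True)  # highest score first
    return scores[:top_k]


# Toy 2-D vectors; real embedding-001 vectors are much longer
docs = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
print(rank_by_similarity([1.0, 0.1], docs, top_k=2))

# With real data: rank_by_similarity(query_embedding, output['embedding'])
```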

If you're having trouble reproducing the same results, check out my Deepnote workspace.

There are many advanced features that we didn't cover in this introductory tutorial. You can learn more about the Gemini API in the Gemini API: Quickstart with Python.

In this tutorial, we learned about Gemini and how to use its Python API to generate responses. Specifically, we covered text generation, visual understanding, streaming, conversation history, custom output, and embeddings. However, this only scratches the surface of what Gemini can do.

Feel free to share with me what you have built using the free Gemini API. The possibilities are limitless.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.