Image by Author

Every machine learning model that you train has a set of parameters or model coefficients. The goal of the machine learning algorithm, formulated as an optimization problem, is to learn the optimal values of these parameters.

In addition, machine learning models also have a set of hyperparameters. Examples include the value of K (the number of neighbors) in the K-Nearest Neighbors algorithm and the batch size when training a deep neural network.

These hyperparameters are not learned by the model. Instead, they are specified by the developer. They influence model performance and are tunable. So how do you find the best values for these hyperparameters? This process is called hyperparameter optimization or hyperparameter tuning.

The two most common hyperparameter tuning techniques are:

- Grid search

- Randomized search

In this guide, we'll learn how these techniques work and how to implement them in scikit-learn.

Let's start by training a simple Support Vector Machine (SVM) classifier on the wine dataset.

First, import the required modules and classes:

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score
```

The wine dataset is one of the built-in datasets in scikit-learn. So let's read in the features and the target labels as shown:

```python
# Load the Wine dataset
wine = datasets.load_wine()
X = wine.data    # feature matrix
y = wine.target  # class labels
```

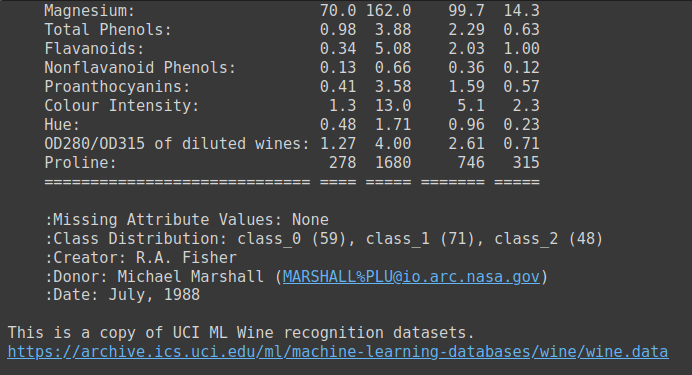

The wine dataset is a simple dataset with 13 numeric features and three output class labels. It's a good candidate dataset for learning your way around multi-class classification problems. You can run wine.DESCR to get a description of the dataset.

Output of wine.DESCR
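A quick way to check the shape and class balance described above is sketched below. It reloads the dataset so it runs on its own and uses NumPy's `bincount` to count the samples in each class.

```python
import numpy as np
from sklearn import datasets

# Load the same Wine dataset used above
wine = datasets.load_wine()
X, y = wine.data, wine.target

# Number of samples and number of numeric features
print(X.shape)  # (178, 13)

# The three class names and how many samples belong to each class
print(wine.target_names)
print(np.bincount(y))  # [59 71 48]
```

The classes are not perfectly balanced, but no class is rare, which is one reason plain accuracy is a reasonable metric for this dataset.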

Next, split the dataset into train and test sets. Here we've used a test_size of 0.2, so 80% of the data goes into the training dataset and 20% into the test dataset.

```python
# Split the dataset into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=24)
```
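To see how `test_size=0.2` plays out on this dataset's 178 samples, here is a small self-contained check that repeats the split and prints the resulting set sizes.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

wine = datasets.load_wine()
X, y = wine.data, wine.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=24)

# 0.2 * 178 = 35.6; scikit-learn rounds the test size up to 36 samples
print(len(X_train), len(X_test))  # 142 36
```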

Now instantiate a support vector classifier and fit the model to the training dataset. Then evaluate its performance on the test set.

```python
# Create a baseline SVM classifier with default hyperparameters
baseline_svm = SVC()
baseline_svm.fit(X_train, y_train)
y_pred = baseline_svm.predict(X_test)
```

Because this is a simple multi-class classification problem, we can look at the model's accuracy.

```python
# Evaluate the baseline model
accuracy = accuracy_score(y_test, y_pred)
print(f"Baseline SVM Accuracy: {accuracy:.2f}")
```

We see that the accuracy score of this model with the default hyperparameter values is about 0.78.

Output >>>

```
Baseline SVM Accuracy: 0.78
```

Here we used a random_state of 24. A different random state gives a different train-test split and, therefore, a different accuracy score.
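The effect of random_state can be seen directly by repeating the baseline experiment over several seeds. The seeds below are arbitrary choices for illustration, and the exact accuracies may vary with your scikit-learn version, so no specific numbers are claimed here.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

wine = datasets.load_wine()
X, y = wine.data, wine.target

# Repeat the baseline experiment with a few arbitrary random states
scores = {}
for seed in [0, 1, 24, 42, 99]:
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = SVC().fit(X_train, y_train)  # default hyperparameters every time
    scores[seed] = accuracy_score(y_test, model.predict(X_test))

for seed, acc in scores.items():
    print(f"random_state={seed}: accuracy={acc:.2f}")
```

If the printed accuracies differ noticeably across seeds, that spread is exactly why a single split is a noisy basis for choosing hyperparameters.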

So we need a better way than a single train-test split to evaluate the model's performance. What if we trained the model on many such splits and considered the average accuracy, while also trying out different combinations of hyperparameters? That's exactly why we use cross-validation in model evaluation and hyperparameter search. We'll learn more in the following sections.

Next, let's identify the hyperparameters that we can tune for this support vector machine classifier.

In hyperparameter tuning, we aim to find the best combination of hyperparameter values for our SVM classifier. The commonly tuned hyperparameters for the support vector classifier include:

- C: Regularization parameter, controlling the trade-off between maximizing the margin and minimizing classification error.

- kernel: Specifies the type of kernel function to use (e.g., 'linear', 'rbf', 'poly').

- gamma: Kernel coefficient for the 'rbf', 'poly', and 'sigmoid' kernels. It is ignored by the 'linear' kernel.

Cross-validation helps assess how well the model generalizes to unseen data and reduces the risk of overfitting to a single train-test split. The commonly used k-fold cross-validation involves splitting the dataset into k equally sized (or nearly equally sized) folds. The model is trained k times, with each fold serving as the validation set once and the remaining k - 1 folds serving as the training set. So we get one cross-validation accuracy for each fold, and their average is a more stable estimate than any single split.
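Here is a minimal sketch of the k-fold procedure described above, written out by hand with scikit-learn's `KFold` on the training set. Shuffling with `random_state=0` is an assumption made for reproducibility. In practice, `cross_val_score(SVC(), X_train, y_train, cv=5)` runs the same loop in one call (and, for classifiers, uses stratified folds).

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import KFold, train_test_split
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

wine = datasets.load_wine()
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, test_size=0.2, random_state=24
)

# Split the training set into 5 folds; each fold is the validation set exactly once
kf = KFold(n_splits=5, shuffle=True, random_state=0)
fold_scores = []
for train_idx, val_idx in kf.split(X_train):
    model = SVC()
    model.fit(X_train[train_idx], y_train[train_idx])  # train on the other 4 folds
    preds = model.predict(X_train[val_idx])             # validate on the held-out fold
    fold_scores.append(accuracy_score(y_train[val_idx], preds))

print("Per-fold accuracy:", np.round(fold_scores, 2))
print(f"Mean CV accuracy: {np.mean(fold_scores):.2f}")
```

Note that the test set is never touched here; it stays reserved for the final evaluation.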

When we run the grid and randomized searches to find the best hyperparameters, we'll choose the hyperparameters with the best average cross-validation score.

Grid search is a hyperparameter tuning technique that performs an exhaustive search over a specified hyperparameter space to find the combination of hyperparameters that yields the best model performance.

How Grid Search Works

We define the hyperparameter search space as a parameter grid. The parameter grid is a dictionary where you specify each hyperparameter you want to tune along with a list of values to explore.

Grid search then systematically explores every possible combination of hyperparameters from the parameter grid. It fits and evaluates the model for each combination using cross-validation and selects the combination that yields the best performance.
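The loop below spells out what grid search does under simplifying assumptions. It uses a small, hypothetical grid over C and gamma for an RBF kernel (not the grid used later in this article), scores each combination with `cross_val_score`, and keeps the combination with the best mean cross-validation accuracy. `GridSearchCV` automates this loop and adds conveniences such as refitting the best model.

```python
from itertools import product

import numpy as np
from sklearn import datasets
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.svm import SVC

wine = datasets.load_wine()
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, test_size=0.2, random_state=24
)

# Hypothetical small grid, for illustration only
grid = {"C": [0.1, 1, 10], "gamma": ["scale", "auto"]}

best_score, best_params = -np.inf, None
# product() yields every combination: 3 values of C x 2 values of gamma = 6 candidates
for C, gamma in product(grid["C"], grid["gamma"]):
    model = SVC(kernel="rbf", C=C, gamma=gamma)
    # Mean accuracy over 5 cross-validation folds for this combination
    score = cross_val_score(model, X_train, y_train, cv=5).mean()
    if score > best_score:
        best_score, best_params = score, {"C": C, "gamma": gamma}

print("Best params:", best_params)
print(f"Best mean CV accuracy: {best_score:.2f}")
```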

Next, let's implement grid search in scikit-learn.

First, import the GridSearchCV class from scikit-learn's model_selection module:

```python
from sklearn.model_selection import GridSearchCV
```

Let's define the parameter grid for the SVM classifier:

```python
# Define the hyperparameter grid
param_grid = {
    'C': [0.1, 1, 10],
    'kernel': ['linear', 'rbf', 'poly'],
    'gamma': [0.1, 1, 'scale', 'auto']
}
```

For this example, grid search evaluates the model's performance for every combination of:

- C set to 0.1, 1, and 10,
- kernel set to 'linear', 'rbf', and 'poly', and
- gamma set to 0.1, 1, 'scale', and 'auto'.

This results in a total of 3 × 3 × 4 = 36 different combinations to evaluate. With 5-fold cross-validation, that means 36 × 5 = 180 model fits, plus one final refit of the best combination on the full training set.
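The count of 36 can be checked with scikit-learn's `ParameterGrid`, which `GridSearchCV` uses to enumerate its candidates. The second grid is an optional variant, not part of the original walkthrough. Because the 'linear' kernel ignores gamma, splitting the grid into a list of dictionaries avoids fitting redundant linear-kernel candidates.

```python
from sklearn.model_selection import ParameterGrid

# The same grid defined above
param_grid = {
    'C': [0.1, 1, 10],
    'kernel': ['linear', 'rbf', 'poly'],
    'gamma': [0.1, 1, 'scale', 'auto']
}
candidates = list(ParameterGrid(param_grid))
print(len(candidates))  # 36
print(candidates[0])    # one candidate is a plain dict of hyperparameter values

# Optional variant: only pair gamma with kernels that actually use it
param_grid_split = [
    {'kernel': ['linear'], 'C': [0.1, 1, 10]},
    {'kernel': ['rbf', 'poly'], 'C': [0.1, 1, 10], 'gamma': [0.1, 1, 'scale', 'auto']},
]
print(len(ParameterGrid(param_grid_split)))  # 3 + 24 = 27
```

`GridSearchCV` accepts the list-of-dicts form for `param_grid` as well, so the variant can be passed in directly.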

We then instantiate GridSearchCV to tune the hyperparameters of baseline_svm:

```python
# Create the GridSearchCV object
grid_search = GridSearchCV(estimator=baseline_svm, param_grid=param_grid, cv=5)

# Fit the model with the grid of hyperparameters
grid_search.fit(X_train, y_train)
```

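Note that we've used 5-fold cross-validation (cv=5). For classifiers, an integer cv makes scikit-learn use stratified k-fold splits, so each fold keeps roughly the same class proportions.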

Finally, we evaluate the performance of the best model (with the optimal hyperparameters found by grid search) on the test data:

```python
# Get the best hyperparameters and model
best_params = grid_search.best_params_
best_model = grid_search.best_estimator_

# Evaluate the best model
y_pred_best = best_model.predict(X_test)
accuracy_best = accuracy_score(y_test, y_pred_best)
print(f"Best SVM Accuracy: {accuracy_best:.2f}")
print(f"Best Hyperparameters: {best_params}")
```

As seen, the model achieves an accuracy score of 0.94 with the following hyperparameters:

Output >>>

```
Best SVM Accuracy: 0.94
Best Hyperparameters: {'C': 0.1, 'gamma': 0.1, 'kernel': 'poly'}
```
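To see the average cross-validation scores behind `best_params_`, you can inspect the `cv_results_` attribute of a fitted search. The sketch below uses a reduced, RBF-only grid (an assumption chosen so it runs quickly) rather than the full grid above; the same attributes exist on any fitted `GridSearchCV`.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

wine = datasets.load_wine()
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, test_size=0.2, random_state=24
)

# Reduced grid for speed (illustrative, not the article's grid)
small_grid = {"C": [0.1, 1, 10], "gamma": ["scale", "auto"]}
grid_search = GridSearchCV(SVC(kernel="rbf"), small_grid, cv=5)
grid_search.fit(X_train, y_train)

results = grid_search.cv_results_
# One line per candidate: its rank, mean CV accuracy across the 5 folds, and parameters
for params, mean, rank in zip(
    results["params"], results["mean_test_score"], results["rank_test_score"]
):
    print(rank, f"{mean:.3f}", params)

# best_score_ is the mean CV accuracy of the selected best_params_
print(grid_search.best_params_, f"{grid_search.best_score_:.3f}")
```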

Using grid search for hyperparameter tuning has the following advantages:

- Grid search explores all specified combinations, ensuring you don't miss the best hyperparameters within the defined search space.

- It's a good choice for exploring smaller hyperparameter spaces.

On the flip side, however:

- Grid search can be computationally expensive, especially when dealing with many hyperparameters and many values for each, because the number of combinations grows multiplicatively. It may not be feasible for very complex models or extensive hyperparameter searches.
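To put the cost in numbers, the sketch below counts model fits as a grid grows. The extra 'degree' hyperparameter and the 10-values-per-hyperparameter scenario are hypothetical, chosen only to show the multiplicative growth; the counts exclude the final refit.

```python
from math import prod

cv_folds = 5
grid_sizes = {"C": 3, "kernel": 3, "gamma": 4}  # number of values per hyperparameter in the grid above

def n_fits(sizes, folds):
    # Every combination is fit once per cross-validation fold
    return prod(sizes.values()) * folds

print(n_fits(grid_sizes, cv_folds))  # 180

# Hypothetical: add a 'degree' hyperparameter with 3 values
print(n_fits({**grid_sizes, "degree": 3}, cv_folds))  # 540

# Hypothetical: 10 values each for C, gamma, and degree
print(n_fits({"C": 10, "kernel": 3, "gamma": 10, "degree": 10}, cv_folds))  # 15000
```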

Now let's learn about randomized search.

Randomized search is another hyperparameter tuning technique that explores random combinations of hyperparameters within specified distributions or ranges. It's particularly useful when dealing with a large hyperparameter search space.

How Randomized Search Works

In randomized search, instead of specifying a grid of values, you can define probability distributions or ranges for each hyperparameter, which makes for a much larger hyperparameter search space.

Randomized search then randomly samples a fixed number of hyperparameter combinations from these distributions. This lets randomized search explore a diverse set of hyperparameter combinations efficiently.
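The sampling step can be tried in isolation with scikit-learn's `ParameterSampler`, the helper `RandomizedSearchCV` uses to draw its candidates. The search space below mirrors the one used for the SVM in this article; `random_state=0` is an assumption added for reproducibility.

```python
import numpy as np
from scipy.stats import uniform
from sklearn.model_selection import ParameterSampler

param_dist = {
    'C': uniform(0.1, 10),  # scipy's (loc, scale) form: samples fall in [0.1, 10.1]
    'kernel': ['linear', 'rbf', 'poly'],  # lists are sampled uniformly
    'gamma': ['scale', 'auto'] + list(np.logspace(-3, 3, 50)),
}

# Draw 5 random combinations from the space
samples = list(ParameterSampler(param_dist, n_iter=5, random_state=0))
for s in samples:
    print(s)
```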

Now let's tune the hyperparameters of the baseline SVM classifier using randomized search.

We import the RandomizedSearchCV class and define param_dist, a much larger hyperparameter search space:

```python
import numpy as np
from scipy.stats import uniform
from sklearn.model_selection import RandomizedSearchCV

param_dist = {
    'C': uniform(0.1, 10),  # Uniform distribution over [0.1, 10.1] (loc=0.1, scale=10)
    'kernel': ['linear', 'rbf', 'poly'],
    'gamma': ['scale', 'auto'] + list(np.logspace(-3, 3, 50))  # 50 log-spaced values from 0.001 to 1000
}
```

Similar to grid search, we instantiate the randomized search model to search for the best hyperparameters. Here, we set n_iter to 20, so 20 random hyperparameter combinations will be sampled. With cv=5, that's 20 × 5 = 100 fits, compared with 180 for the grid search above.

```python
# Create the RandomizedSearchCV object
randomized_search = RandomizedSearchCV(
    estimator=baseline_svm, param_distributions=param_dist, n_iter=20, cv=5
)
randomized_search.fit(X_train, y_train)
```
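Because the search samples randomly, its results can change between runs. Passing `random_state` to `RandomizedSearchCV` makes the sampled candidates reproducible. The sketch below uses a smaller, RBF-only search space (an assumption chosen to keep it fast) and checks that two runs with the same seed agree.

```python
from scipy.stats import uniform
from sklearn import datasets
from sklearn.model_selection import RandomizedSearchCV, train_test_split
from sklearn.svm import SVC

wine = datasets.load_wine()
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, test_size=0.2, random_state=24
)

# Smaller illustrative space, not the article's param_dist
param_dist = {'C': uniform(0.1, 10), 'gamma': ['scale', 'auto']}

def run_search(seed):
    search = RandomizedSearchCV(
        SVC(kernel='rbf'), param_distributions=param_dist,
        n_iter=5, cv=5, random_state=seed,
    )
    search.fit(X_train, y_train)
    return search.best_params_

# Same seed -> same sampled candidates -> same best parameters
first, second = run_search(42), run_search(42)
print(first)
print(first == second)  # True
```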

We then evaluate the model's performance with the best hyperparameters found through randomized search:

```python
# Get the best hyperparameters and model
best_params_rand = randomized_search.best_params_
best_model_rand = randomized_search.best_estimator_

# Evaluate the best model
y_pred_best_rand = best_model_rand.predict(X_test)
accuracy_best_rand = accuracy_score(y_test, y_pred_best_rand)
print(f"Best SVM Accuracy: {accuracy_best_rand:.2f}")
print(f"Best Hyperparameters: {best_params_rand}")
```

The best accuracy and optimal hyperparameters are:

Output >>>

```
Best SVM Accuracy: 0.94
Best Hyperparameters: {'C': 9.66495227534876, 'gamma': 6.25055192527397, 'kernel': 'poly'}
```

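The parameters found through randomized search are different from those found through grid search, yet the model with these hyperparameters also achieves an accuracy score of 0.94. Because no random_state was passed to RandomizedSearchCV, rerunning the search may sample different combinations and return different best hyperparameters.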

Let's sum up the advantages of randomized search:

- Randomized search is efficient when dealing with a large number of hyperparameters or a wide range of values because it doesn't require an exhaustive search.

- It can handle various parameter types, including continuous and discrete values.
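As an illustration of mixing parameter types, the hypothetical search space below combines a continuous log-uniform distribution for C (`scipy.stats.loguniform`), a discrete integer distribution for degree (`scipy.stats.randint`), and a categorical list for kernel. Note that degree only affects the 'poly' kernel; this space is a sketch, not a recommended configuration.

```python
from scipy.stats import loguniform, randint
from sklearn.model_selection import ParameterSampler

# Hypothetical search space mixing parameter types
param_dist = {
    'C': loguniform(1e-2, 1e2),  # continuous, spread evenly on a log scale from 0.01 to 100
    'degree': randint(2, 5),     # discrete integers 2, 3, or 4 (upper bound excluded)
    'kernel': ['rbf', 'poly'],   # categorical, sampled uniformly from the list
}

samples = list(ParameterSampler(param_dist, n_iter=4, random_state=0))
for params in samples:
    print(params)
```

A log-uniform distribution is a common choice for scale-like hyperparameters such as C, since values like 0.01 and 100 are then equally likely to be explored.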

Here are some limitations of randomized search:

- Due to its random nature, it may not always find the best hyperparameters, but it often finds good ones quickly.

- Unlike grid search, it doesn't guarantee that all possible combinations will be explored.
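A back-of-the-envelope estimate helps explain why randomized search often finds good hyperparameters quickly despite these limitations. Assume each sampled combination is independent and that "good" means landing in the best 5% of the search space (both simplifying assumptions). Then the chance that at least one of n samples is good is 1 - 0.95^n.

```python
def prob_hit_top_fraction(top_fraction, n_iter):
    """Probability that at least one of n_iter independent random samples
    lands in the best `top_fraction` of the search space."""
    return 1 - (1 - top_fraction) ** n_iter

# With n_iter=20, as in the search above
print(f"{prob_hit_top_fraction(0.05, 20):.2f}")  # 0.64

# Around 60 samples give roughly a 95% chance
print(f"{prob_hit_top_fraction(0.05, 60):.2f}")  # 0.95
```

The estimate ignores how the score surface actually looks, so treat it as intuition rather than a guarantee.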

We learned how to perform hyperparameter tuning with GridSearchCV and RandomizedSearchCV in scikit-learn. We then evaluated our model's performance with the best hyperparameters.

In summary, grid search exhaustively searches through all possible combinations in the parameter grid, whereas randomized search randomly samples hyperparameter combinations.

Both techniques help you identify strong hyperparameters for your machine learning model while reducing the risk of overfitting to a specific train-test split.

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more.