Picture by Creator

Analysis metrics are just like the measuring instruments we use to grasp how effectively a machine studying mannequin is doing its job. They assist us examine completely different fashions and determine which one works greatest for a specific job. On this planet of classification issues, there are some generally used metrics to see how good a mannequin is, and it is important to know which metric is true for our particular drawback. After we grasp the small print of every metric, it turns into simpler to determine which one matches the wants of our job.

On this article, we are going to discover the essential analysis metrics utilized in classification duties and study conditions the place one metric could be extra related than others.

Earlier than we dive deep into analysis metrics, it’s essential to grasp the essential terminology related to a classification drawback.

Floor Reality Labels: These consult with the precise labels corresponding to every instance in our dataset. These are the premise of all analysis and predictions are in comparison with these values.

Predicted Labels: These are the category labels predicted utilizing the machine studying mannequin for every instance in our dataset. We examine such predictions to the bottom reality labels utilizing numerous analysis metrics to calculate if the mannequin might study the representations in our knowledge.

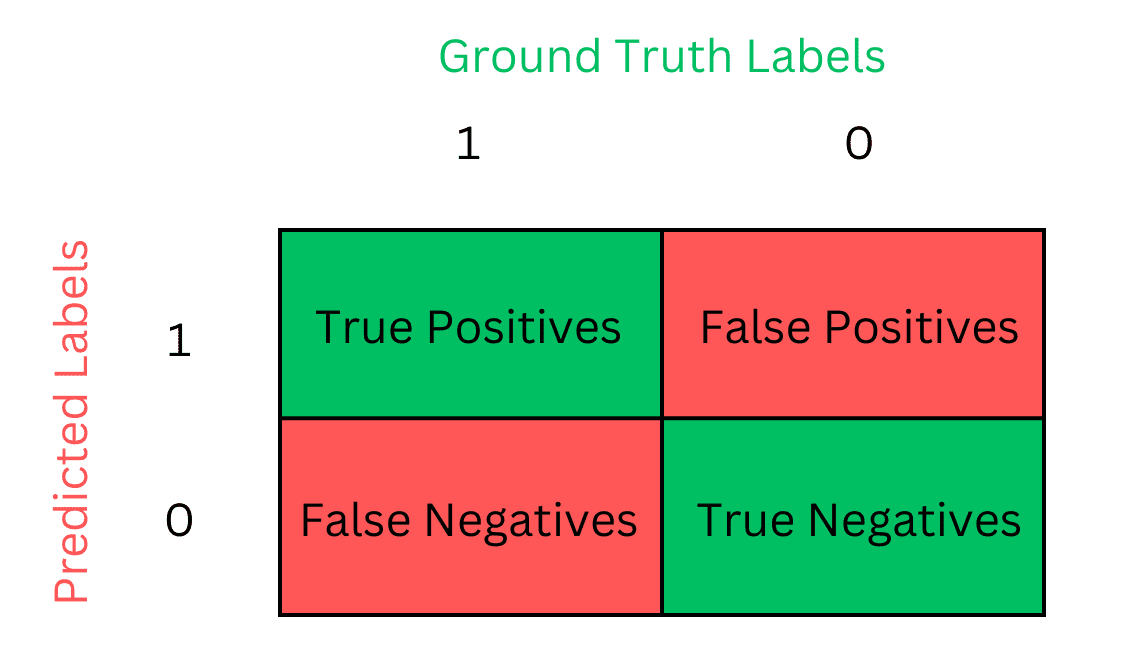

Now, allow us to solely take into account a binary classification drawback for a neater understanding. With solely two completely different courses in our dataset, evaluating floor reality labels with predicted labels may end up in one of many following 4 outcomes, as illustrated within the diagram.

Picture by Creator: Utilizing 1 to indicate a optimistic label and 0 for a detrimental label, the predictions can fall into one of many 4 classes.

True Positives: The mannequin predicts a optimistic class label when the bottom reality can also be optimistic. That is the required behaviour because the mannequin can efficiently predict a optimistic label.

False Positives: The mannequin predicts a optimistic class label when the bottom reality label is detrimental. The mannequin falsely identifies an information pattern as optimistic.

False Negatives: The mannequin predicts a detrimental class label for a optimistic instance. The mannequin falsely identifies an information pattern as detrimental.

True Negatives: The required habits as effectively. The mannequin appropriately identifies a detrimental pattern, predicting 0 for an information pattern having a floor reality label of 0.

Now, we are able to construct upon these phrases to grasp how widespread analysis metrics work.

That is the simplest but intuitive method of assessing a mannequin’s efficiency for classification issues. It measures the proportion of whole labels that the mannequin appropriately predicted.

Subsequently, accuracy will be computed as follows:

or

When to Use

Given its simplicity, accuracy is a broadly used metric. It supplies a superb start line for verifying if the mannequin can study effectively earlier than we use metrics particular to our drawback area.

Accuracy is barely appropriate for balanced datasets the place all class labels are in related proportions. If that’s not the case, and one class label considerably outnumbers the others, the mannequin should obtain excessive accuracy by all the time predicting the bulk class. The accuracy metric equally penalizes the incorrect predictions for every class, making it unsuitable for imbalanced datasets.

- When Misclassification prices are equal

Accuracy is appropriate for instances the place False Positives or False Negatives are equally dangerous. For instance, for a sentiment evaluation drawback, it’s equally dangerous if we classify a detrimental textual content as optimistic or a optimistic textual content as detrimental. For such situations, accuracy is an efficient metric.

Precision focuses on guaranteeing we get all optimistic predictions appropriate. It measures what fraction of the optimistic predictions have been really optimistic.

Mathematically, it’s represented as

When to Use

- Excessive Value of False Positives

Take into account a situation the place we’re coaching a mannequin to detect most cancers. It is going to be extra necessary for us that we don’t misclassify a affected person who doesn’t have most cancers i.e. False Constructive. We need to be assured once we make a optimistic prediction as wrongly classifying an individual as cancer-positive can result in pointless stress and bills. Subsequently, we extremely worth that we predict a optimistic label solely when the precise label is optimistic.

Take into account one other situation the place we’re constructing a search engine matching consumer queries to a dataset. In such instances, we worth that the search outcomes match intently to the consumer question. We don’t need to return any doc irrelevant to the consumer, i.e. False Constructive. Subsequently, we solely predict optimistic for paperwork that match intently to the consumer question. We worth high quality over amount as we favor a small variety of intently associated outcomes as a substitute of a excessive variety of outcomes that will or might not be related for the consumer. For such situations, we wish excessive precision.

Recall, often known as Sensitivity, measures how effectively a mannequin can keep in mind the optimistic labels within the dataset. It measures what fraction of the optimistic labels in our dataset the mannequin predicts as optimistic.

The next recall means the mannequin is healthier at remembering what knowledge samples have optimistic labels.

When to Use

- Excessive Value of False Negatives

We use Recall when lacking a optimistic label can have extreme penalties. Take into account a situation the place we’re utilizing a Machine Studying mannequin to detect bank card fraud. In such instances, early detection of points is important. We don’t need to miss a fraudulent transaction as it might probably improve losses. Therefore, we worth Recall over Precision, the place misclassification of a transaction as deceitful could also be straightforward to confirm and we are able to afford just a few false positives over false negatives.

It’s the harmonic imply of Precision and Recall. It penalizes fashions which have a big imbalance between both metric.

It’s broadly utilized in situations the place each precision and recall are necessary and permits for attaining a steadiness between each.

When to Use

In contrast to accuracy, the F1-Rating is appropriate for assessing imbalanced datasets as we’re evaluating efficiency primarily based on the mannequin’s capacity to recall the minority class whereas sustaining a excessive precision general.

- Precision-Recall Commerce-off

Each metrics are reverse to one another. Empirically, bettering one can usually result in degradation within the different. F1-Rating aids in balancing each metrics and is helpful in situations the place each Recall and Precision are equally essential. Taking each metrics under consideration for calculation, the F1-Rating is a broadly used metric for evaluating classification fashions.

We have discovered that completely different analysis metrics have particular jobs. Realizing these metrics helps us select the proper one for our job. In actual life, it isn’t nearly having good fashions; it is about having fashions that match our enterprise wants completely. So, selecting the correct metric is like selecting the best software to verify our mannequin does effectively the place it issues most.

Nonetheless confused about which metric to make use of? Beginning with accuracy is an efficient preliminary step. It supplies a primary understanding of your mannequin’s efficiency. From there, you may tailor your analysis primarily based in your particular necessities. Alternatively, take into account the F1-Rating, which serves as a flexible metric, placing a steadiness between precision and recall, making it appropriate for numerous situations. It may be your go-to software for complete classification analysis.

Muhammad Arham is a Deep Studying Engineer working in Pc Imaginative and prescient and Pure Language Processing. He has labored on the deployment and optimizations of a number of generative AI purposes that reached the worldwide high charts at Vyro.AI. He’s taken with constructing and optimizing machine studying fashions for clever programs and believes in continuous enchancment.