Machine learning models have become an integral part of decision-making across many industries, yet they often struggle when dealing with noisy or diverse datasets. That's where ensemble learning comes into play.

This article will demystify ensemble learning and introduce you to its powerful random forest algorithm. Whether you are a data scientist looking to hone your toolkit or a developer seeking practical insights into building robust machine learning models, this piece is meant for you!

By the end of this article, you'll have a thorough understanding of ensemble learning and how random forests work in Python. So whether you're an experienced data scientist or simply curious to expand your machine learning skills, join us on this journey!

Ensemble learning is a machine learning technique in which the predictions of multiple weak models are combined to produce stronger predictions. The idea behind ensemble learning is to reduce the bias and errors of single models by leveraging the predictive power of each model.

To take a real-life example, imagine that you have seen an animal and you do not know which species it belongs to. Instead of asking one expert, you ask ten experts and go with the majority vote. This is known as hard voting.

Hard voting is when we take the class prediction of each classifier and then assign an input to the class that receives the most votes. Soft voting, on the other hand, is when we take the probability that each classifier predicts for each class and then assign an input to the class with the highest probability averaged over the classifiers.
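The difference between the two voting schemes is easiest to see on a case where they disagree. The sketch below uses made-up probabilities for three hypothetical classifiers and a single input; the class names and numbers are assumptions chosen purely for illustration.

```python
import numpy as np

# Hypothetical predicted probabilities from three classifiers for ONE input,
# over two classes. The numbers are invented for illustration only.
classes = np.array(["cat", "dog"])
probas = np.array([
    [0.90, 0.10],  # classifier 1 is very confident in "cat"
    [0.40, 0.60],  # classifier 2 leans slightly towards "dog"
    [0.45, 0.55],  # classifier 3 leans slightly towards "dog"
])

# Hard voting: each classifier casts one vote for its most likely class,
# and the class with the most votes wins.
votes = probas.argmax(axis=1)
vote_counts = np.bincount(votes, minlength=len(classes))
hard_winner = classes[vote_counts.argmax()]

# Soft voting: average the probabilities per class across classifiers,
# then pick the class with the highest average probability.
mean_proba = probas.mean(axis=0)
soft_winner = classes[mean_proba.argmax()]

print("Hard voting picks:", hard_winner)
print("Soft voting picks:", soft_winner)
```

Here two of the three classifiers vote "dog", so hard voting picks "dog", while the one confident classifier pulls the averaged probability towards "cat", so soft voting picks "cat". In scikit-learn, the same choice is exposed through the `voting="hard"` and `voting="soft"` options of `VotingClassifier`.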

Ensemble learning is commonly used to improve model performance, which includes improving classification accuracy and reducing the mean absolute error of regression models. In addition, ensemble learners usually yield a more stable model. Ensemble learners work best when the models are not correlated, because then every model can learn something unique and contribute to the overall performance.

Although ensemble learning can be applied in many ways, three techniques have gained a lot of popularity in practice because they are easy to implement and use. These three techniques are:

- Bagging: Bagging, short for bootstrap aggregation, is an ensemble learning technique in which the models are trained on random samples of the dataset.

- Stacking: Stacking, short for stacked generalization, is an ensemble learning technique in which we train a model to combine several models trained on our data.

- Boosting: Boosting is an ensemble learning technique that focuses on selecting the misclassified data to train the models on.

Let's dive deeper into each of these techniques and see how we can use Python to train these models on our dataset.

Bagging, also known as bootstrap aggregating, draws random samples of the data, trains a learning algorithm on each sample, and aggregates the results of the resulting models (for example, by averaging their predicted probabilities) into one overall result.

This technique involves:

- Creating several subsets of the original dataset by sampling with replacement.

- Developing a base model for each of these subsets.

- Running all models concurrently and combining their predictions to obtain the final prediction.
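The three steps above can be sketched by hand before reaching for a ready-made estimator. This is a minimal illustration, assuming the iris dataset, decision trees as base models, ten models, and a majority vote as the aggregation rule; none of these choices come from the original text.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Illustrative data: the iris dataset with a fixed train/test split
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

rng = np.random.default_rng(42)
n_models = 10  # arbitrary number of base models for this sketch
models = []

for _ in range(n_models):
    # Step 1: draw a bootstrap sample (same size as the training set, with replacement)
    idx = rng.integers(0, len(X_train), size=len(X_train))
    # Step 2: fit one base model on that sample
    tree = DecisionTreeClassifier(random_state=0).fit(X_train[idx], y_train[idx])
    models.append(tree)

# Step 3: collect every model's predictions and aggregate them by majority vote
all_preds = np.array([m.predict(X_test) for m in models])  # shape (n_models, n_test)
bagged_pred = np.array([np.bincount(col).argmax() for col in all_preds.T])

accuracy = (bagged_pred == y_test).mean()
print(f"Bagged accuracy: {accuracy:.2f}")
```

For a regression task the same loop would apply, with the majority vote replaced by the mean of the individual predictions.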

Scikit-learn provides both a BaggingClassifier and a BaggingRegressor. A bagging meta-estimator fits each base model on a random subset of the original dataset, then aggregates the individual base model predictions, either by voting or by averaging, into a final prediction. This method reduces variance by introducing randomization into the way the models are built.

Let's take an example in which we use the bagging estimator from scikit-learn:

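    from sklearn.ensemble import BaggingClassifier
    from sklearn.tree import DecisionTreeClassifier

    # The base model is passed positionally because its keyword name differs
    # between scikit-learn releases (base_estimator before 1.2, estimator after).
    bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=10, max_samples=0.5, max_features=0.5)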

The bagging classifier takes several parameters:

- estimator (named base_estimator before scikit-learn 1.2): The base model used in the bagging approach. Here we use the decision tree classifier.

- n_estimators: The number of estimators we will use in the bagging approach.

- max_samples: The number of samples drawn from the training set for each base estimator. A float, as used here, is interpreted as a fraction of the training set.

- max_features: The number of features used to train each base estimator. A float, as used here, is interpreted as a fraction of the features.

Now we'll fit this classifier on the training set and score it.

    bagging.fit(X_train, y_train)
    bagging.score(X_test, y_test)

We can do the same for regression tasks; the difference is that we use regression estimators instead.

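    from sklearn.ensemble import BaggingRegressor
    from sklearn.tree import DecisionTreeRegressor

    bagging = BaggingRegressor(DecisionTreeRegressor())
    bagging.fit(X_train, y_train)
    # Score the fitted bagging model (returns R^2 for regressors)
    bagging.score(X_test, y_test)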

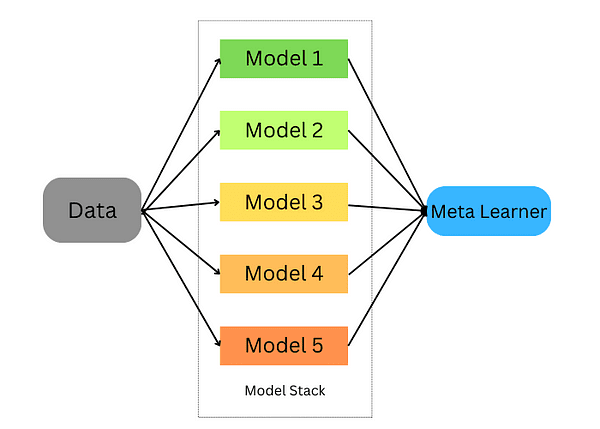

Stacking is a technique for combining multiple estimators in order to reduce their biases and produce accurate predictions. The predictions from each estimator are combined and fed into a final meta-model that is trained through cross-validation. Stacking can be applied to both classification and regression problems.

Stacking ensemble learning

Stacking occurs in the following steps:

- Split the data into a training and validation set

- Divide the training set into K folds

- Train a base model on K-1 folds and make predictions on the K-th fold

- Repeat until you have a prediction for each fold

- Fit the base model on the whole training set

- Use the model to make predictions on the test set

- Repeat steps 3–6 for other base models

- Train a new model (the meta-model) on the out-of-fold predictions, and use the base models' predictions on the test set as its input features

- Make final predictions on the test set using the meta-model
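The same procedure can also be delegated to scikit-learn's built-in `StackingClassifier`, which produces the cross-validated base model predictions internally. The following is a compact sketch, assuming the iris dataset and an arbitrary pair of base models (a random forest and a k-nearest-neighbours classifier) picked only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Illustrative data: the iris dataset with a fixed train/test split
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

stack = StackingClassifier(
    # Base models whose cross-validated predictions become meta-features
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=50, random_state=42)),
        ("knn", KNeighborsClassifier()),
    ],
    # Meta-model trained on the base models' out-of-fold predictions
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,  # number of folds used to build the out-of-fold predictions
)
stack.fit(X_train, y_train)

stack_accuracy = stack.score(X_test, y_test)
print(f"Stacking accuracy: {stack_accuracy:.2f}")
```

After fitting, the base models refitted on the whole training set are available in `stack.estimators_`, and the meta-model in `stack.final_estimator_`.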

In the example below, we begin by creating two base classifiers (RandomForestClassifier and GradientBoostingClassifier) and one meta-classifier (LogisticRegression). We then use K-fold cross-validation to turn the base classifiers' predictions on the training data (the iris dataset) into input features for the meta-classifier.

After that, we use the base classifiers' predictions on the test set as input features for the meta-classifier, let the meta-classifier make the final predictions, and evaluate the accuracy of the stacked ensemble.

    import numpy as np
    from sklearn.base import clone
    from sklearn.datasets import load_iris
    from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import KFold, train_test_split

    # Load the dataset
    data = load_iris()
    X, y = data.data, data.target

    # Split the data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Define base classifiers
    base_classifiers = [
        RandomForestClassifier(n_estimators=100, random_state=42),
        GradientBoostingClassifier(n_estimators=100, random_state=42)
    ]

    # Define a meta-classifier
    meta_classifier = LogisticRegression()

    # Create an array to hold the out-of-fold predictions from base classifiers
    base_classifier_predictions = np.zeros((len(X_train), len(base_classifiers)))

    # Perform stacking using K-fold cross-validation
    kf = KFold(n_splits=5, shuffle=True, random_state=42)
    for train_index, val_index in kf.split(X_train):
        train_fold, val_fold = X_train[train_index], X_train[val_index]
        train_target, val_target = y_train[train_index], y_train[val_index]
        for i, clf in enumerate(base_classifiers):
            # Fit a fresh copy on K-1 folds and predict the held-out fold
            cloned_clf = clone(clf)
            cloned_clf.fit(train_fold, train_target)
            base_classifier_predictions[val_index, i] = cloned_clf.predict(val_fold)

    # Train the meta-classifier on base classifier predictions
    meta_classifier.fit(base_classifier_predictions, y_train)

    # Fit each base classifier on the whole training set, then predict on the test set
    stacked_predictions = np.zeros((len(X_test), len(base_classifiers)))
    for i, clf in enumerate(base_classifiers):
        clf.fit(X_train, y_train)
        stacked_predictions[:, i] = clf.predict(X_test)

    # Make final predictions using the meta-classifier
    final_predictions = meta_classifier.predict(stacked_predictions)

    # Evaluate the stacked ensemble's performance
    accuracy = accuracy_score(y_test, final_predictions)
    print(f"Stacked Ensemble Accuracy: {accuracy:.2f}")

Boosting is a machine learning ensemble technique that reduces bias and variance by turning weak learners into strong learners. The weak learners are applied to the dataset sequentially: first, an initial model is created and fitted to the training set. Once the errors of the first model have been identified, another model is designed to correct them.
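To make the "each model corrects the previous one" idea concrete, here is a minimal sketch of sequential error correction for a regression problem. It is a simplified, gradient-boosting-style loop on squared error, not the exact procedure of any library; the synthetic sine data, the depth-1 trees, the learning rate, and the number of rounds are all assumptions made for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression data: a noisy sine wave (illustrative only)
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 6, size=200)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=200)

learning_rate = 0.5  # how much of each correction is applied
n_rounds = 20        # number of weak learners added sequentially

prediction = np.zeros_like(y)  # the ensemble starts by predicting 0 everywhere
mse_per_round = []

for _ in range(n_rounds):
    # Errors left over by the ensemble built so far
    residual = y - prediction
    # A weak learner (a depth-1 tree) is fitted to those errors
    stump = DecisionTreeRegressor(max_depth=1).fit(X, residual)
    # Its scaled output is added to the ensemble's prediction
    prediction += learning_rate * stump.predict(X)
    mse_per_round.append(np.mean((y - prediction) ** 2))

print(f"Training MSE after 1 round: {mse_per_round[0]:.3f}")
print(f"Training MSE after {n_rounds} rounds: {mse_per_round[-1]:.3f}")
```

Each weak learner on its own is a poor model, but because every new one is fitted to what the ensemble still gets wrong, the training error shrinks as rounds are added.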

There are several popular algorithms and implementations of boosting ensemble learning techniques. Let's explore the most well-known ones.

6.1. AdaBoost

AdaBoost is an effective ensemble learning technique that trains weak learners sequentially. Each iteration increases the weight of incorrectly predicted instances while decreasing the weight assigned to correctly predicted instances. This emphasis on difficult observations pushes AdaBoost to become increasingly accurate over time, with its final prediction determined by a weighted majority vote or weighted sum of its weak learners.

AdaBoost is a versatile algorithm suitable for both regression and classification tasks, but here we focus on its application to classification problems using scikit-learn. Let's look at how we can use it for classification tasks in the example below:

    from sklearn.ensemble import AdaBoostClassifier

    model = AdaBoostClassifier(n_estimators=100)
    model.fit(X_train, y_train)
    model.score(X_test, y_test)

In this example, we used the AdaBoostClassifier from scikit-learn and set n_estimators to 100. The default base learner is a decision tree, and you can change it. In addition, the parameters of the decision tree can be tuned.
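As a rough sketch of swapping in a tuned base learner, the snippet below passes a slightly deeper decision tree to AdaBoostClassifier. The iris dataset and the choice of `max_depth=2` are assumptions made for illustration, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Illustrative data: the iris dataset with a fixed train/test split
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# A custom weak learner: a decision tree limited to depth 2
base_learner = DecisionTreeClassifier(max_depth=2)

# The base learner is passed positionally, because its keyword name differs
# between scikit-learn releases (base_estimator before 1.2, estimator after).
ada = AdaBoostClassifier(base_learner, n_estimators=100, random_state=42)
ada.fit(X_train, y_train)

ada_accuracy = ada.score(X_test, y_test)
print(f"AdaBoost accuracy: {ada_accuracy:.2f}")
```

Shallower trees give weaker individual learners and usually call for more boosting rounds, so `max_depth` and `n_estimators` are typically tuned together.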

6.2. eXtreme Gradient Boosting (XGBoost)

eXtreme Gradient Boosting, more popularly known as XGBoost, is one of the best implementations of boosting ensemble learners thanks to its parallel computations, which make it highly optimized to run on a single computer. XGBoost is available through the xgboost package developed by the machine learning community.

    import xgboost as xgb

    # "binary:logistic" assumes y_train holds binary (0/1) labels
    params = {"objective": "binary:logistic", 'colsample_bytree': 0.3, 'learning_rate': 0.1,
              'max_depth': 5, 'alpha': 10}

    model = xgb.XGBClassifier(**params)
    model.fit(X_train, y_train)
    model.score(X_test, y_test)

6.3. LightGBM

LightGBM is another gradient boosting algorithm based on tree learning. However, unlike other tree-based algorithms, it uses leaf-wise tree growth, which makes it converge faster.

Leaf-wise tree growth / Image by LightGBM

In the example below, we'll apply LightGBM to a binary classification problem:

    import lightgbm as lgb

    # Wrap the training and evaluation data in LightGBM Dataset objects
    lgb_train = lgb.Dataset(X_train, y_train)
    lgb_eval = lgb.Dataset(X_test, y_test, reference=lgb_train)

    params = {'boosting_type': 'gbdt',
              'objective': 'binary',
              'num_leaves': 40,
              'learning_rate': 0.1,
              'feature_fraction': 0.9
              }

    # Train for 200 boosting rounds, reporting metrics on both datasets
    gbm = lgb.train(params,
                    lgb_train,
                    num_boost_round=200,
                    valid_sets=[lgb_train, lgb_eval],
                    valid_names=['train', 'valid'],
                    )

Ensemble learning and random forests are powerful machine learning techniques that are widely used by machine learning practitioners and data scientists. In this article, we covered the basic intuition behind them, when to use them, and finally the most popular algorithms and how to use them in Python.

Youssef Rafaat is a computer vision researcher & data scientist. His research focuses on developing real-time computer vision algorithms for healthcare applications. He also worked as a data scientist for more than 3 years in the marketing, finance, and healthcare domains.